Bulk Loading Data via a PowerShell Script in Flyway

The test data management strategy for any RDBMS needs to include a fast, automated way of allowing developers to "build-and-fill" multiple copies of any version of the database, for ad-hoc or automated testing. The technique presented in this article uses a baseline migration script to create the empty database at the required version, which then triggers a PowerShell callback script that bulk loads in the right version of the data.

This article is part of a series on managing test data in a Flyway development:

- Better Database Development: The Role of Test Data Management

- A Test Data Management Strategy for Database Migrations

- Dealing with Database Data and Metadata in Flyway Developments

- Adding Test Data to Databases During Flyway Migrations

- Getting Data In and Out of SQL Server Flyway Builds

- Bulk Loading of Data via a PowerShell Script in Flyway

- Managing Test Datasets for a Database: What’s Required?

- Managing Datasets for Database Development Work using Flyway

Also relevant are the series of related articles on Database testing with Flyway.

Why would a database developer need anything other than SQL INSERT statements to generate or load test data? Well, there are at least two points in any database build or migration process where you might need a better approach.

The first is when you need to load a high volume of data at a reasonable speed, such as for performance testing. Ideally, the quantity of data will be in the same order of magnitude as the actual or expected production data. If you attempt to load high volumes of data with individual INSERT statements, it will slow down the build dramatically. Even with the latest iteration of the Pubs practice database, I can reduce the build time from twenty minutes (INSERT scripts) to under a minute (bulk copy).

Each database system has its own way of doing bulk data import and export; it is a general requirement. SQL Server has BCP and I demonstrated how to use it to bulk load test data for a Flyway build process in Getting Data In and Out of SQL Server Flyway Builds. Likewise, PostgreSQL has its COPY command, , MySQL its LOAD DATA INFILE or mysqlimport utility, Oracle has its BULK INSERT, BULK COLLECT and FORALL, and so on. Data files won’t necessarily be stored on the machine hosting the database, and so a script executed on a workstation is much better placed for the task than a SQL batch.

The second task that usually needs to be scripted is the generation of randomized data for development work. Again, you need plenty of it, because every bit of SQL you ever write looks great until it faces a tsunami of data. Although it’s possible to generate data using SQL, the process is much easier in a scripting language because you are likely to need to keep many different data banks on file to use for different test cases. I tend to use a great deal of typical text as a resource, which I reduce to Markov tables to create fake but convincing data. All this data needs to be imported rapidly into the database.

Any system for handling bulk data boils down to getting data in and pulling data out. These two activities are best contrived as two matching processes, import and export, because most bulk loading systems have a lot of options for the formatting of data, and infinite possibilities in the naming of data files.

Importing the right version of the data

In any relational database, data is likely to be version-specific: when you are importing data at speed, it will only squeeze into the tables for which it was designed. When you are doing automated testing, the data must be correct for the test run because you will be checking the result to ensure it is what is expected.

There isn’t necessarily a one-to-one ratio of data edition to version; you may need more than one dataset for each version. You generally only hit problems with the data if you change the base tables in any way. The more mature a database becomes, the less inevitable these table changes become, and the more likely that one data set will last for several versions. However, there will inevitably be mapping of database version and data version at some point in the database lifecycle. To accommodate this, I’ll introduce a method that is reasonably flexible.

Creating the test data

There are many ways to create test data. You can, for example, start with a fresh build without data, anonymize real production data or top up existing development data. Data generators are often scripted or come as tools. Whatever you use, they come with a ‘plan’ of how to generate data for each column, and how the data interrelates. I’ve written several articles on how to do this, and I often use SQL Data Generator for the job and then transfer the data to whatever RDBMS I’m currently using. Whichever way you do it, you then must create the file-based storage for the table data. Although BCP can insert CSV files, SQL Server’s fastest way loading of data, for example, is using native BCP, meaning that the data is in the binary format required. PostgreSQL’s COPY command is similar in that respect.

As discussed earlier, we need to get data in and out of a Flyway-managed database in such a way that we know the database version to which it relates. If we can do this, then it means that we can create test data for automated post-migration integration tests. This may seem a paradox, but the integration build of the database will be done after the unit testing in development, so the test data is likely to already exist that is needed for the type of automated testing done during deployment.

I’ll explain how to deal with some of these complications by illustrating it with a development scenario

Scenario: integration testing after a merge

Let’s imagine that work on a feature branch is complete, and the team have devised a single migration script to merge the changes into the parent branch, to create the new version. However, before committing the script to the parent, Develop, say (or to main if this is an external release), they want to perform sufficient integration testing to be confident that the migration script will work as expected, preserving all existing data, and that the resulting new version works to requirements.

One possible workflow for this will start by creating a private, empty ‘test build’ of the current database version (in Develop). They can do this using a ‘repeatable’ build in Flyway community but a better way is to use a baseline migration (a.k.a. state) script in Flyway Teams.

The team bulk load it with test data, and then apply their migration script containing the feature changes, which will also need to migrate existing data and add any new data as required. This enables them to run enough integration tests to be sufficiently confident to update the Develop branch with the migration script. This triggers the build of the new version and, assuming all is well, they can then export and save the data for the new version, as well as create a baseline migration for the new version. Automated and ad-hoc testing is now easy because the existing databases can be upgraded as usual, with a migration script, and newly created databases, whether they are on new docker images or traditional database servers, can be built rapidly at the correct version.

This is just one example, and your own workflow will vary according to the type of database and its importance to the organization. Staging will have rather different requirements. However, one thing is certain: to do testing you need to get data in and out rapidly.

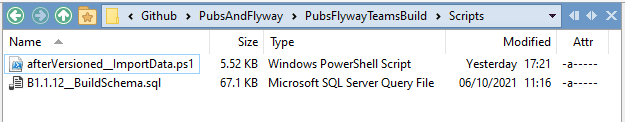

For our demo of this workflow, we set up our branch project. Here is the Scripts subdirectory:

It contains a Baseline Migration script, which just builds the schema but inserts no data. It’s rather like doing a traditional build except that Flyway gives the resulting database is given the correct version number and maintains a version history, so that we never lose track of what is where. Therefore, when you create this “B” script, make sure you don’t include the Flyway history table as that would confuse Flyway. Also, remove any extended properties to do with the database.

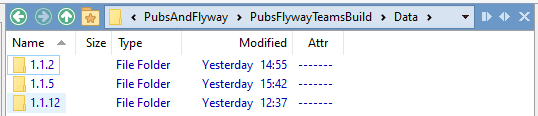

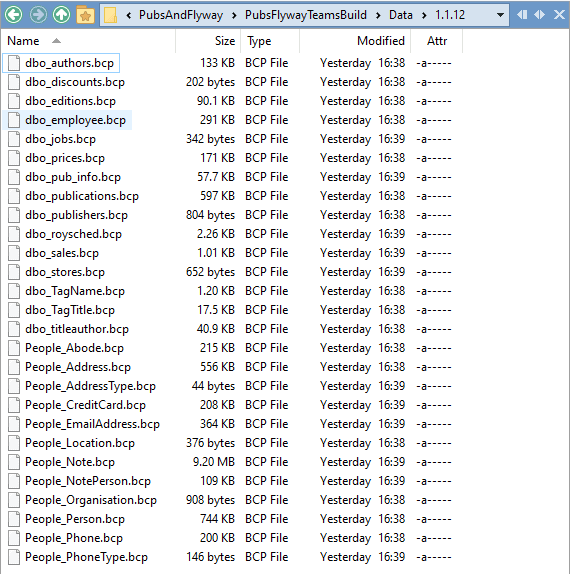

The second file, a PowerShell callback script, is called once the migration has been done. It determines the version reached, and then looks to see if there is an associated data directory with the bulk data in it. We’ve chosen to store this data within the project. Fortunately, we already have it so it can be imported:

You’ll notice BCP files in different files for different versions, each in their own directory. If a data set for the version exists, it fills the database with it. Otherwise, it looks for next lowest version that does have a data folder, assuming that the new data is saved at the version where the structure of the data last changed. The script imports the data into the correct tables.

When we do the Flyway migration, we get the following output:

Successfully validated 1 migration (execution time 00:00.158s) Creating schema [classic]… Creating schema [people… Creating Schema History table [PubsSix].[dbo].[flyway_schema_history] ... Current version of schema [dbo]: null Migrating schema [dbo] to version "1.1.12 - BuildSchema" Executing script callback: afterVersioned - ImportData Executing powershell S:\work\Github\PubsAndFlyway\PubsFlywayTeamsBuild\Scripts\afterVersioned__ImportData.ps1 scriptblocks and cmdlet loaded. V1.2.40 Executed FetchAnyRequiredPasswords Executed GetCurrentVersion Executed BulkCopyIn Successfully applied 1 migration to schema [dbo], now at version v1.1.12 (execution time 00:50.149s)

We then do an info action

---------------------------------------- Flyway Teams Edition (10 schemas) 8.0.1 by Redgate licensed to red-gate.com (license ID c6c1a4e9-632f-4cd0-8f57-c27e360e0341) until 20 24-08-24 ---------------------------------------- Schema version: 1.1.12 +-----------+---------+------------------------------+--------------+----------+----------+ | Category | Version | Description | Type | State | Undoable | +-----------+---------+------------------------------+--------------+----------+----------+ | | | << Flyway Schema Creation >> | SCHEMA | Success | | | Versioned | 1.1.12 | BuildSchema | SQL_BASELINE | Baseline | No | +-----------+---------+------------------------------+--------------+----------+----------+

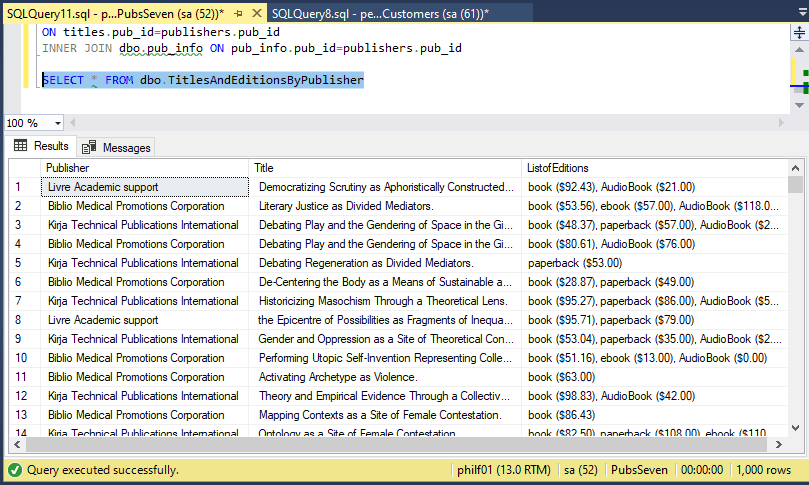

Seems OK. We take the database for a quick spin just to make sure that the data is in there OK.

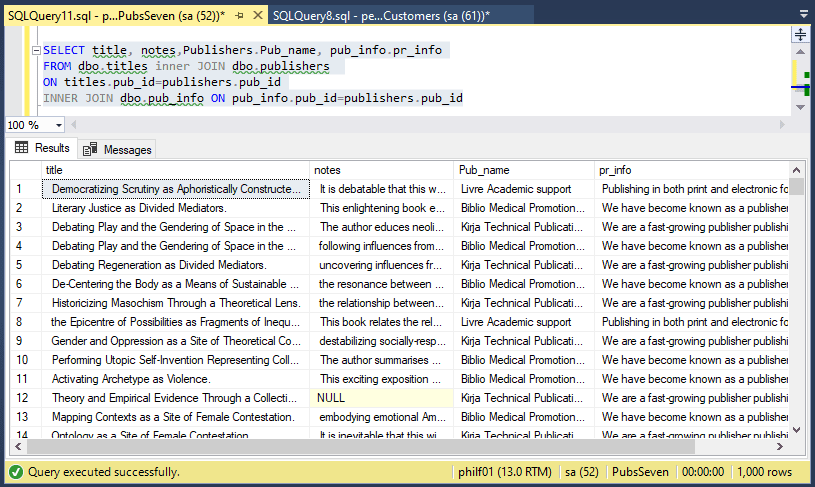

We can review some details about or books and their publishers:

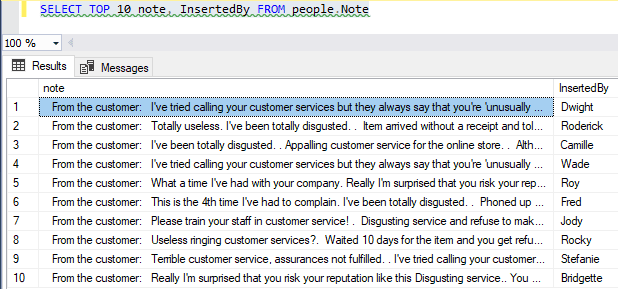

And the bitter complaints of our less than satisfied customers…

So, all you now need is a directory with all the connection information for all the databases that need to be provisioned at that version, and away you go. In terms of the build, it is remarkably quick, so can be used for provisioning as many databases as you need as quickly as using Docker images.

Running the Flyway Build

We’ll start with the script that executes Flyway Teams to do the ‘variant build’ process, using our baseline migration script. This is very similar to any other code for executing Flyway. You can use your preferred method such as using environment variables.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 |

<# tell PowerShell where Flyway is. You only need to do this if your flyway installation has not provided a path command. You need to change that to the correct path for your installation. #> Set-Alias Flyway 'C:\ProgramData\chocolatey\lib\flyway.commandline\tools\flyway-7.15.0\flyway.cmd' -Scope local #specify the DSN, and create the ODVC connection # and here are our project details. The project folder $ProjectFolder = '<PathToProjectFolder e.g. PubsAndFlyway\PubsFlywayTeamsBuild>' $Server = '<MyServerName>'; $Schemas = '<MyListOfSchemas>'; $Database = '<MyDatabaseName>'; <# you only need this username and password if there is no domain authentication #> $username = '<MyUserName>' $port = '<MyPortNumber>' # add a bit of error-checking. are the various directories there @{'Project Directory'="$ProjectFolder"; 'Data Directory'="$ProjectFolder\Data"; 'Scripts Directory'="$ProjectFolder\Scripts"}.GetEnumerator()|Foreach{ if (-not (Test-Path $_.Value)) { Write-Error "Sorry, but I couldn't find a $($_.Name) at the $($_.Value) location" } } <# we will need to retrieve credentials #> if ($username -ne '') #then it is using SQL Server Credentials { # we see if we've got these stored already $SqlEncryptedPasswordFile = "$env:USERPROFILE\$($username)-$Server-sqlserver.xml" # test to see if we know about the password in a secure string stored in the user area if (Test-Path -path $SqlEncryptedPasswordFile -PathType leaf) { #has already got this set for this login so fetch it $SqlCredentials = Import-CliXml $SqlEncryptedPasswordFile } else #then we have to ask the user for it (once only) { # hasn't got this set for this login $SqlCredentials = get-credential -Credential $UserName # Save in the user area $SqlCredentials | Export-CliXml -Path $SqlEncryptedPasswordFile <# Export-Clixml only exports encrypted credentials on Windows. otherwise it just offers some obfuscation but does not provide encryption. #> } $FlywayBuildArgs = @("-url=jdbc:sqlserver://$($Server):$port;databaseName=$Database", "-locations=filesystem:$ProjectFolder\Scripts", <# the migration folder #> "-user=$($SqlCredentials.UserName)", "-password=$($SqlCredentials.GetNetworkCredential().password)") } else { $FlywayBuildArgs = @("-url=jdbc:sqlserver://$($Server):$port;databaseName=$Database;integratedSecurity=true". "-locations=filesystem:$ProjectFolder\Scripts")<# the migration folder #> } $FlywayBuildArgs += <# the project variables that we reference with placeholders #> @( "-schemas=$Schemas", "-placeholders.schemas=$Schemas", #This isn't passed to callbacks otherwise "-placeholders.projectDescription=$ProjectDescription", "-placeholders.projectName=$ProjectName") cd "$ProjectFolder\scripts" #if this works we're probably good to go Flyway @FlywayBuildArgs info -teams #then try it out! Flyway @FlywayBuildArgs clean -teams Flyway @FlywayBuildArgs migrate |

The PowerShell Callback to insert the data

This is the afterVersioned_ImportData.ps1 callback script and so Flyway will execute it after every versioned migration script that runs successfully during a Migrate. In our example, it will run after the B1.1.12 build script completes.

The first part of the script gathers the data we need, passed in from environment variables and various placeholders, and then the second part uses this data to execute some tasks from our DatabaseBuildAndMigrateTasks.ps1 library of functions, including getting the current version number ($GetCurrentVerison task) and then bulk copying in the data for that version ($BulkCopyIn task).

By keeping all the routines in a library file, it means all my callback scripts look very similar. It also makes it easy to add extra tasks into the callback rather than have more than one callback on the same event. See Scripting with Flyway Teams and PowerShell for more details about how this sort of callback script works.

I’ll present the code here, but by the time you read this, a more up-to-date version of this script is likely to be in the GitHub directory.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 |

<#To set off any task, all you need is a PowerShell script that is created in such a way that it can be executed by Flyway when it finishes a migration run. Although you can choose any of the significant points in any Flyway action, there are only one or two of these callback points that are useful to us. This can be a problem if you have several chores that need to be done in the same callback or you have a stack of scripts all on the same callback, each having to gather up and process parameters, or pass parameters such as the current version from one to another. A callback script can't be debugged as easily as an ordinary script. In this design, the actual callback just executes a list of tasks in order, and you simply add a task to the list after you've debugged and tested it & placed in the DatabaseBuildAndMigrateTasks.ps1 file. Each task is passed a standard 'parameters' object. This keeps the 'complexity beast' snarling in its lair. The parameter object is passed by reference so each task can add value to the data in the object, such as passwords, version number, errors, warnings and log entries. All parameters are passed by Flyway. It does so by environment variables that are visible to the script. You can access these directly, and this is probably best for tasks that require special information passed by custom placeholders, such as the version of the RDBMS, or the current variant of the version you're building run the library script, assuming it is in the project directory containing the script directory #> . "..\DatabaseBuildAndMigrateTasks.ps1" <# The most useful data passed to this script by Flyway is the URL that you used to call Flyway. This is likely to tell you the server, port, database and the type of database (RDBMS). We can use the URL if we just want to make JDBC calls. We can't and don't. Instead we extract the connection details and use these. #> $FlywayURLRegex = 'jdbc:(?<RDBMS>[\w]{1,20})://(?<server>[\w\-\.]{1,40})(?<port>:[\d]{1,4}|)(;.+databaseName=|/)(?<database>[\w]{1,20})' #this FLYWAY_URL contains the current database, port and server so it is worth grabbing $ConnectionInfo = $env:FLYWAY_URL #get the environment variable if ($ConnectionInfo -eq $null) #OMG... it isn't there for some reason { Write-error 'missing value for flyway url' } <# a reference to this Hashtable is passed to each process (it is a scriptBlock) so as to make debugging easy. We'll be a bit cagey about adding key-value pairs as it can trigger the generation of a copy which can cause bewilderment and problems- values don't get passed back. Don't fill anything in here!!! The script does that for you#> $DatabaseDetails = @{ 'RDBMS'=''; # necessary for systems with several RDBMS on the same server 'server' = ''; #the name of your server 'database' = ''; #the name of the database 'version' = ''; #the version 'ProjectFolder' = ''; #where all the migration files are 'project' = ''; #the name of your project 'projectDescription'=''; #a brief description of the project 'flywayTable'='';#The name and schema of the flyway Table 'uid' = ''; #optional if you are using windows authewntication 'pwd' = ''; #only if you use a uid. Leave blank. we fill it in for you 'locations' = @{ }; # for reporting file locations used 'problems' = @{ }; # for reporting any big problems 'warnings' = @{ } # for reporting any issues } # for reporting any warnings if ($ConnectionInfo -imatch $FlywayURLRegex) { $DatabaseDetails.RDBMS = $matches['RDBMS']; $DatabaseDetails.server = $matches['server']; $DatabaseDetails.port = $matches['port']; $DatabaseDetails.database = $matches['database'] } else { write-error "failed to obtain the value of the RDBMS, server, Port or database from the FLYWAY_URL" } $DatabaseDetails.uid = $env:FLYWAY_USER; if ($env:FP__projectName__ -ne $null) {$DatabaseDetails.Project = $env:FP__projectName__;} if ($env:FP__projectDescription__ -ne $null) {$DatabaseDetails.ProjectDescription = $env:FP__projectDescription__}; $DatabaseDetails.ProjectFolder = split-path $PWD.Path -Parent; if ($env:FP__flyway_defaultSchema__ -ne $null -and $env:FP__flyway_table__ -ne $null) {$DatabaseDetails.flywayTable="$($env:FP__flyway_defaultSchema__).$($env:FP__flyway_table__)"} else {$DatabaseDetails.flywayTable='dbo.flyway_schema_history'}; <# You can dump this array for debugging so that it is displayed by Flyway #> $DatabaseDetails|convertTo-json <# these routines write to reports in "$($env:USERPROFILE)\Documents\GitHub\$( $param1.EscapedProject)\$($param1.Version)\Reports" and will return the path in the $DatabaseDetails if you need it. Set it to whatever you want in the file DatabaseBuildAndMigrateTasks.ps1 You will also need to set SQLCMD to the correct value. This is set by a string $SQLCmdAlias in ..\DatabaseBuildAndMigrateTasks.ps1 below are the tasks you want to execute. Some, like the on getting credentials, are essential before you execute others In order to execute tasks, you just load them up in the order you want. It is like loading a slide-deck. #> $PostMigrationTasks = @( $FetchAnyRequiredPasswords, #checks the hash table to see if there is a username without a password. #if so, it fetches the password from store or asks you for the password if it is a new connection $GetCurrentVersion, #checks the database and gets the current version number #it does this by reading the Flyway schema history table. $BulkCopyIn # read the contents of the files into the tables ) Process-FlywayTasks $DatabaseDetails $PostMigrationTasks |

To output the BCP data

In our earlier scenario, the developers would now apply the migration script for the new feature, and then run all their tests to check out the new version. Once all this is complete, they can then can BCP it out to a directory so that it’s ready to use for other developers who need a quick build of the new version.

This is even simpler because it doesn’t have to call Flyway. To create a BCP Data directory you just execute this code. It checks the version of the database before it saves the BCP Data. Because we use the same logic to save the file as we do to read the file, we can be sure that the right table gets the data.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 |

$Details = @{ 'server' = '<MyServer>'; #The Server name 'database' = '<MyDatabaseName>'; #The Database 'uid' = '<MyUserName>'; #The User ID. you only need this if there is no domain authentication or secrets store #> 'pwd' = ''; # The password. This gets filled in if you request it 'ProjectFolder'='' ;#The folder containing the project 'version' = ''; # TheCurrent Version. This gets filled in if you request it 'Warnings' = @{ }; # Just leave this be. Filled in for your information 'Problems' = @{ }; # Just leave this be. Filled in for your information 'Locations' = @{ }; # Just leave this be. Filled in for your information } # add a bit of error-checking. Is the project directory there if (-not (Test-Path "$($Details.ProjectFolder)")) { Write-Error "Sorry, but I couldn't find a project directory at the $($Details.ProjectFolder) location" } # ...and is the script directory there? if (-not (Test-Path "$($Details.ProjectFolder)\Scripts")) { Write-Error "Sorry, but I couldn't find a scripts directory at the $($Details.ProjectFolder)\Scripts location" } cd "$($details.ProjectFolder)\scripts" . ..\DatabaseBuildAndMigrateTasks.ps1 # now we use the scriptblock to determine the version number and name from SQL Server $Details.problems=@{} Process-FlywayTasks $Details @( $FetchAnyRequiredPasswords, #deal with passwords $GetCurrentVersion, #access the database to work out the current version $BulkCopyOut #now write out the contents of all the tables ) |

Conclusions

When databases grow up, the insertion of data can become a drag because it takes so long. Database builds soon become overnight builds. By instead inserting the data separately in a single process, you can increase the speed of database builds by orders of magnitude. This requires a slightly different development culture, but can bring benefits, especially in standardizing the data for each version, but allowing different datasets for testing a particular version.

The great advantage of the migration approach is that each version script migrates both data and metadata together. By the time the script is required to update a production server, it will be well-tested. The same isn’t true of development that is done purely with static database scripts like the code illustrated here, because this approach would require an additional script to update a production system from its current version to be identical to the new release version whilst preserving its production data. No database methodology can avoid using a migration for the update of the production database.

The Baseline script in this article takes the database from zero to 1.1.12. In reality, these can only be used for doing just that, as required for provisioning development or test databases from zero rapidly up to that version. You will always still need to rely on migration scripts for updating existing versions of the database.

Static scripts generally don’t include data insertions except possibly for enumerations that never change. Metadata is clearly distinguished from data. Migration scripts take a more liberal approach to this, in that they allow more freedom, and makes it easier to fit the tool to team practices, the requirements of the test team, the scale of the database, the legal framework in place for the data, and the security requirements. To move to an approach of bulk-loading data with Flyway, it requires more discipline in the way that data is loaded and saved, and we’ll go into this a bit more in a later article.