Documenting your Database with SQL Change Automation

Phil Factor uses SQL Change Automation and PowerShell to verify that the source code for the current database version builds successfully and, if so, to generate web-based database documentation using SQL Doc.

It would be wrong to portray SQL Change Automation (SCA) as being suitable only for epic project deployments, of the sort described in my previous article. It can do smaller tasks as well. To demonstrate, I’ll show how you can use SCA to check that the source code builds a database, and then to produce web-based documentation of the result, published within the local domain. The documentation helps team-based development because it records dependencies that otherwise only become apparent on the live database, and it exposes a range of useful properties that can’t be garnered from the build script.

The PowerShell

You are going to need a SQL build script as the source for this task. SCA prefers a SQL Source Control project or a SQL Compare project, but it will cope with a directory containing one or more SQL scripts that together build a database. SSMS can produce these scripts for you, but don’t include the Data Control Language (DCL) stuff, such as the database users. SCA is canny enough to work out the dependencies and run the files in the right order. Databases must be built in the right order, after all.
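SCA will take whatever it finds in the folder, so it can be worth checking for DCL before the build. The function below is a minimal sketch and is not part of SCA. `Find-DclStatement` is a hypothetical name. Its regular expression only looks for lines that start with GRANT, DENY, REVOKE, or CREATE/ALTER/DROP USER or ROLE. It can therefore miss unusual layouts, and it will also flag matches inside block comments.

```powershell
# Hypothetical pre-flight check (not an SCA cmdlet): list lines in the SQL source
# scripts that look like DCL or user/role definitions, which should be left out.
function Find-DclStatement {
    [CmdletBinding()]
    param(
        # the directory holding the build scripts (the source code 'project')
        [Parameter(Mandatory)] [string] $SourceCodePath
    )
    # Deliberately simple: only matches at the start of a line. Select-String is
    # case-insensitive by default, so 'grant' and 'GRANT' are both caught.
    $pattern = '^\s*(GRANT|DENY|REVOKE|(CREATE|ALTER|DROP)\s+(USER|ROLE))\b'
    Get-ChildItem -Path $SourceCodePath -Filter '*.sql' -Recurse -File |
        Select-String -Pattern $pattern |
        ForEach-Object {
            # one object per suspicious line, so the results can be tabulated
            [pscustomobject]@{
                File       = $_.Path
                LineNumber = $_.LineNumber
                Text       = $_.Line.Trim()
            }
        }
}
```

For example, `Find-DclStatement -SourceCodePath 'PathToMySourceDirectory' | Format-Table` lists any lines worth removing before you run the build.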

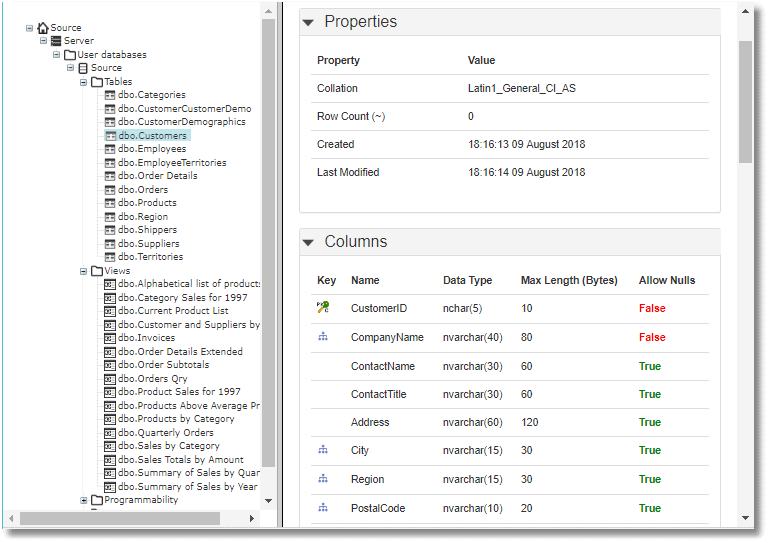

SQL Doc requires a live working database to generate the documentation. In this script, the New-DatabaseDocumentation cmdlet builds its own temporary copy of the database on the same server that Invoke-DatabaseBuild uses, and SQL Doc works from that copy. It scripts out the database objects and reports on the database properties.

```powershell
<# To start off the script we need to define where the temporary server is and the login.
We also need to record where the script project is, and where the documentation
needs to be saved to #>
$instance = 'MyInstance' # the name of the server instance
$credentials = 'User ID=MyName;Password=MyPassword;' # the credentials, if any
# leave the credentials blank if you use Windows Authentication
# the source code 'project', consisting of one or more SQL script files
$SourceCodePath = 'PathToMySourceDirectory'
# the connection string. Leave alone unless you have special requirements
$connection = "Data Source=$instance;$($credentials)MultipleActiveResultSets=False;Encrypt=False;TrustServerCertificate=False"
# the path to the base directory for the website
$where = 'PathToMyWebsiteDirectory' # fill this in, of course
# the options for the validation of the database
$options = ''
<# first we make sure that the SCA module is imported #>
Import-Module SqlChangeAutomation -ErrorAction SilentlyContinue -ErrorVariable +Errors
<# we now validate the database and pass the result to the New-DatabaseDocumentation
cmdlet for documenting the database #>
$documentation = Invoke-DatabaseBuild $SourceCodePath `
    -TemporaryDatabaseServer $connection `
    -SQLCompareOptions $options |
    New-DatabaseDocumentation -TemporaryDatabaseServer $connection
<# The documentation is held as a series of byte[] arrays in the documentation
object, so it is just a matter of iterating through them to save each array to file #>
$documentation.Files.GetEnumerator() | foreach {
    $filePath = "$($where)\$($_.Key)"
    if (-not (Test-Path -PathType Container (Split-Path -Path $filePath) `
            -ErrorAction SilentlyContinue -ErrorVariable +Errors)) {
        # we create the directory if it doesn't already exist
        $null = New-Item -ItemType Directory -Force -Path (Split-Path -Path $filePath) `
            -ErrorAction SilentlyContinue -ErrorVariable +Errors
    }
    Write-Verbose $filePath
    # write the page (or stylesheet, image, etc.) out as raw bytes
    [io.file]::WriteAllBytes($filePath, $_.Value)
}
```

The Invoke-DatabaseBuild cmdlet builds a database project by checking whether the database definition can be deployed to an empty database. If the build is successful, the cmdlet creates an IProject object that represents the built project, which can be used as the input for other SQL Change Automation cmdlets. If no SQL Server is available, the cmdlet can create the temporary copy of the database on LocalDB. If your login doesn’t have the permissions needed to create a database, you can instead use an existing database as the build target, as long as you don’t care about its existing contents and never have two SCA-based scripts using the same target concurrently!
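When you use an existing database, the target is passed as a connection object rather than as a server connection string. This is a sketch that relies on the SCA `New-DatabaseConnection` cmdlet and the `-TemporaryDatabase` parameter of `Invoke-DatabaseBuild`. It also assumes that `New-DatabaseDocumentation` accepts the same parameter, as the text's "the same rules apply" suggests. The wrapper name `Build-DocumentationInExistingDatabase` and the scratch database are hypothetical.

```powershell
# Hypothetical wrapper: validate and document the source scripts using an existing,
# expendable database as the build target instead of creating temporary databases.
# Assumes the SqlChangeAutomation module has already been imported.
function Build-DocumentationInExistingDatabase {
    param(
        [Parameter(Mandatory)] [string] $SourceCodePath,
        [Parameter(Mandatory)] [string] $ServerInstance,
        # every object in this database may be dropped by the build
        [Parameter(Mandatory)] [string] $Database
    )
    # no -Username/-Password supplied, so Windows Authentication is used
    $target = New-DatabaseConnection -ServerInstance $ServerInstance -Database $Database
    # the same target serves both the validation build and the documentation build,
    # so no other script should be using it at the same time
    Invoke-DatabaseBuild $SourceCodePath -TemporaryDatabase $target |
        New-DatabaseDocumentation -TemporaryDatabase $target
}
```

It returns the same documentation object as the main script. For example, `$documentation = Build-DocumentationInExistingDatabase -SourceCodePath 'PathToMySourceDirectory' -ServerInstance 'MyInstance' -Database 'DocBuildScratch'` uses a placeholder database name. The file-saving loop can then run unchanged.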

Assuming the build succeeds, the New-DatabaseDocumentation cmdlet then takes the output of the Invoke-DatabaseBuild cmdlet, an IProject object, and generates documentation for the schema contained within it. It creates a SchemaDocumentation object that represents the documentation for a validated database project.

To generate the documentation, the cmdlet once more creates a temporary copy of the database, and the same rules apply as for the Invoke-DatabaseBuild cmdlet. This double build makes for a slow process. It is best to keep documentation out of the main build process and instead run it separately on a schedule, as an overnight job, since nothing in the main build depends on it.

The database documentation website

The resulting website is far quicker to browse than SSMS. It also provides at-a-glance details that would be difficult to get any other way without typing in SQL queries, including all the information from the extended properties.

It gives members of other teams, such as ops and governance, up-to-date access to what is in the build. If you have PHP on your website, it is very easy to add ways of searching the documentation, such as ‘brute-force’ regex searches, and the results are reasonably quick. Once you get used to the interface, it becomes an irritation to be without the website documentation!
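You don’t need PHP for this kind of brute-force search. The same idea can be run from PowerShell against the directory that the script writes to. This is a minimal sketch that assumes the documentation pages are `.html` files under the website directory (`$where` in the script above). `Search-DatabaseDocumentation` is a hypothetical name. It searches the raw HTML, so a pattern can also match markup.

```powershell
# Hypothetical helper: brute-force regex search of the generated documentation pages
function Search-DatabaseDocumentation {
    param(
        # the base directory of the documentation website ($where in the main script)
        [Parameter(Mandatory)] [string] $Path,
        # a .NET regular expression, matched case-insensitively by Select-String
        [Parameter(Mandatory)] [string] $Pattern
    )
    Get-ChildItem -Path $Path -Filter '*.html' -Recurse -File |
        Select-String -Pattern $Pattern |
        # report each page once, with the number of lines on it that match
        Group-Object -Property Path |
        ForEach-Object {
            [pscustomobject]@{ Page = $_.Name; MatchingLines = $_.Count }
        } |
        # pages with the most matching lines come first
        Sort-Object -Property MatchingLines -Descending
}
```

For instance, `Search-DatabaseDocumentation -Path $where -Pattern 'customer\w*'` lists every page that mentions a customer-related name. The pages with the most matching lines are listed first.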

Conclusion

It takes some time to generate database documentation, so it is tempting to abandon it as a routine part of the build and release process. It is much better to do it separately, as a parallel process to the build, but with the same tools.

If you abandon the documentation, you not only make life a bit more difficult for other members of the development team, such as database developers and DBAs, but you also risk losing some of the DevOps exchange of information and advice that is so useful for rapid releases and deployments.

If the documentation needs to be published remotely, rather than within the local domain, then it makes sense to use the standard SCA method of adding the documentation to the NuGet build artifact package, and then unzipping the build artifact to deploy the various documentation files. I have shown how to do this in previous articles.
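For comparison, here is roughly what that packaging route looks like. It is a sketch based on the SCA cmdlets `New-DatabaseBuildArtifact` (with its `-Documentation` parameter) and `Export-DatabaseBuildArtifact`. The wrapper name `Export-DocumentedBuildArtifact`, the package ID, the version and the output path are all placeholders. Keeping the validated project in a variable lets one build feed both the documentation and the package. However, New-DatabaseDocumentation still creates its own temporary copy of the database.

```powershell
# Hypothetical wrapper: build once, document the validated project, and package
# both into a NuGet build artifact. Assumes SqlChangeAutomation is imported.
function Export-DocumentedBuildArtifact {
    param(
        [Parameter(Mandatory)] [string] $SourceCodePath,
        [Parameter(Mandatory)] [string] $Connection,     # temporary database server
        [Parameter(Mandatory)] [string] $PackageId,      # e.g. 'MyDatabase' (placeholder)
        [Parameter(Mandatory)] [string] $PackageVersion, # e.g. '1.0.0' (placeholder)
        [Parameter(Mandatory)] [string] $OutputPath      # folder for the .nupkg file
    )
    # keep the validated project so it can feed both documentation and packaging
    $validatedProject = Invoke-DatabaseBuild $SourceCodePath -TemporaryDatabaseServer $Connection
    $documentation = $validatedProject |
        New-DatabaseDocumentation -TemporaryDatabaseServer $Connection
    # embed the documentation in the artifact, then write the package to disk
    $validatedProject |
        New-DatabaseBuildArtifact -PackageId $PackageId -PackageVersion $PackageVersion `
            -Documentation $documentation |
        Export-DatabaseBuildArtifact -Path $OutputPath
}
```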