Improving the Quality of your Database Monitoring

Database monitoring should be as simple as possible, and yet still allow the users to drill into sufficient detail to be able to recommend a fix to the problem. The trick is to adopt a layered or 'tiered' approach.

Public-facing databases are monitored for several reasons, and there is no single solution for making the process simple but still able to meet every requirement, for all types of databases.

The support of production databases, in general, has had to become more sophisticated, in response to increasing numbers of databases and the demand for maintenance. The idea of polling each production database manually for routine checks has become increasingly impractical, which has led to the introduction of DevOps practices into the development and running of an organization’s databases. Closer liaison between development and operations, within a DevOps team, means developers are increasingly attuned to what’s required to support databases in commercial use, and to the key points that make operations easier. Operations staff, in turn, have been assisted in harnessing script-based automation to ease the increasing workload.

The greater awareness amongst developers of the operational requirements for supporting databases has allowed organizations to adopt a multi-tiered approach to maintaining services and networks. This approach aims at providing general alerts to general issues, through to potential or actual problems with a “database service”, and finally a separate layer that provides the detailed drill-down. Where the service is a relational database, the appropriate specialized tool will be one that will report in sufficient detail to allow diagnosis by specialist staff, where required, but can also be used by frontline support teams without arcane knowledge.

- Supporting an operational database based on its SLA”

- Responding to problems: the ‘layered’ approach to monitoring

- Minimizing the chances of failure

- Managing large estates

- Compliance checks

- Security

Supporting an operational database based on its SLA

Every database that is in use within an organization must be checked regularly, to ensure that it is working to the level described by its Service Level Agreement (SLA). With this, it is easy to check whether any service is getting the right level of attention. It is also an excellent way of defusing the febrile atmosphere that sometimes follows a service failure.

If the database is engaged in any sort of financial transactions, it must also work under the organization’s auditing rules. These will usually include logging of financial transactions, and some form of audit-quality database activity monitoring. It will be subject to a regular security assessment and be monitored by some form of intrusion detection system. It doesn’t matter where the computer is hosted, it must follow the same rules. There is little room for your creative streak here. The only way to support of an increasing variety of database systems, hosted in several ways, is to tie down the requirements and have a stress-free way of updating them.

The SLA will determine, to an extent, the design of the monitoring system. The system-level security requirements will have a considerable impact on how you support a database. The accountants and auditors will have a strong persuasive power over how the intrinsic way that it monitors business transactions, and how well it can detect possible data loss or data interference. Fortunately, an SLA also makes it much easier to persuade managers of the scale of budget required!

Responding to problems: the ‘layered’ approach to monitoring

Even the best-ordered network environment, armed with advanced metrics to prevent avoidable faults, will occasionally go wrong. Network boxes occasionally just die; databases are useless without connectivity. Even the best database systems will go offline, in the face of some types of hardware problems. There must always be a way of knowing of any failure as soon as possible to then allow you to react quickly.

Level 1 – Is the service alive?

If you hear raised voices from your users before the lights go red on your monitoring console, then your monitoring system isn’t working properly.

The first level of monitoring just checks the end-to-end process. Is the typical office workstation able to connect to a functioning database, or databases? Forget the how or why for the time being. For database processes, this check is like an ODBC or JDBC ‘ping’. All it needs to do is verify that it can connect and that it gets a response to a simple query.

It’s possible to do this sort of status checks via a network service, using SNMPv3 (you can think of it as a simple messaging system for black boxes on networks). At the very least, this sort of ‘ping’ system will detect whether the service can be reached from a particular point in the network.

However, I generally like to see this sort of check extended so that every database has a view or function that, in response to a SQL query, reports the simple ‘functional’ metrics that are unique to that database, such as the number of ‘shopping-baskets’ that got through to successful purchase in the last hour, the number of searches or logins, and so on. If the shopping cart or search facilities stop working, these are the things that users notice first!

An extended “level one” monitoring system like this has proved valuable to me on several occasions. It’s useful as an ‘are-you-alive’ check on database connectivity, from several different parts of the network, and has the advantage that it can be extended to check for the success of running business processes, such as an end-of-month consolidation, or for worrying log entries. It once saved me from embarrassment by alerting me quickly to the fact that a card processing system had failed on a rather visible website!

I’ve always found that scripting is the best way to monitor the end-to-end process. I use a command-line Batch script or BASH script to run the check and send any alert. Results can be saved as CSV so that they are easy to read into Excel, or markdown for messages. These scripts can be used on various locations for a ‘Cron job’ that regularly checks that the basics are working. The more independent checks the better!

At Level 1, you’ll know that the end-to-end system isn’t working somewhere between service and user. Now, at the next level, you need sufficient information to tell you the extent of the problem, and which expert to summon, or which specialized tool to use. There is, for example, no point in summoning the production DBA if it is a network fault, or the network expert if it is a mutual deadlock in the database.

Level 2 –Generic service-level monitoring

At Level 2, you monitor devices in a generic way, where possible, across the entire ‘estate’ of operations. They are just services of some sort. If you are reacting to a level 1 alert, you need to locate the fault, or at least to know roughly where you need to aim your specialized diagnostics to find out what is wrong. This can be challenging where, for example, an entire site-wide Wi-Fi mesh network fails. From level 1, you generally just know that the end-to-end system isn’t working between service and user: Now you need sufficient information to tell you the extent of the problem, and what specialized tool to use, and you need to reach as much of the network as possible. It is a different perspective, and you need it to gauge the extent of the problem. There is a world of difference between a mutual deadlock in a database, and a fire in a server-room.

Normally, level 2 systems will also include alerts and notifications, such as a the ‘failover’ of a database cluster, or RAID or some other resilience system. It will also support automatic network discovery and reporting.

At Level 2 the monitoring tool will need to be backed by a logging system so that you can capture, on a schedule, the metrics for each service and save them for analysis, comparison and projections. This will allow better evidence for capacity planning. It is at this level that it becomes possible to summarize the information into a graphical map or dashboard display. I’ve come across some spectacular ones that can provide a lot of information at a glance, though I believe Hollywood got there first.

SNMPv3 is an example of a traditional monitoring system where a specialized server, termed a ‘manager’, monitors a group of hosts or devices. The results can be collated into a hierarchy by a MIB (Management Information Base). Each managed box such as a router, printer, service or workstation executes a software component called an agent which reports information via SNMPv3 to the ‘manager’. The advantage of this is that most network devices can become an SNMPv3 entity, capable of reporting their status. The downside is that it needs special security arrangements due to the potential opportunities for intrusion. Many organizations are not keen to install agent on sensitive database servers.

However, not only can a single SQL Server be set up as an SNMP entity, but your specialized level 3 database monitoring system, such as Redgate Monitor, so that you can easily indicate at level 2 that there is a SQL Server database problem. This will allow you to implement ‘smart grouping’ of databases, such as those hosted together, where the number of systems make individual reporting impractical.

In the Level 2 category, we use a generic, as opposed to single provider, monitoring system. This type of tool is especially useful in organizations whose ‘database services’ comprise a whole range of database systems, both relational and document-based, for which the organization have issued an SLA, and so need to be monitored. For each major database system, Level 2 monitoring tools will collect a set of standard metrics for each database service, and then use a range of plugins to give to provide more detail for each specific system.

Even relational systems vary widely in how their dynamic running state is reported. Only the core declarative SQL Queries, and the principle of metadata, are well-standardized. Procedural SQL is subject to wild improvisation by the vendors, and one soon finds that metadata queries aren’t consistent. This means that there is a limit to what a generic monitoring system can achieve, so they don’t negate the need to level 3 devices.

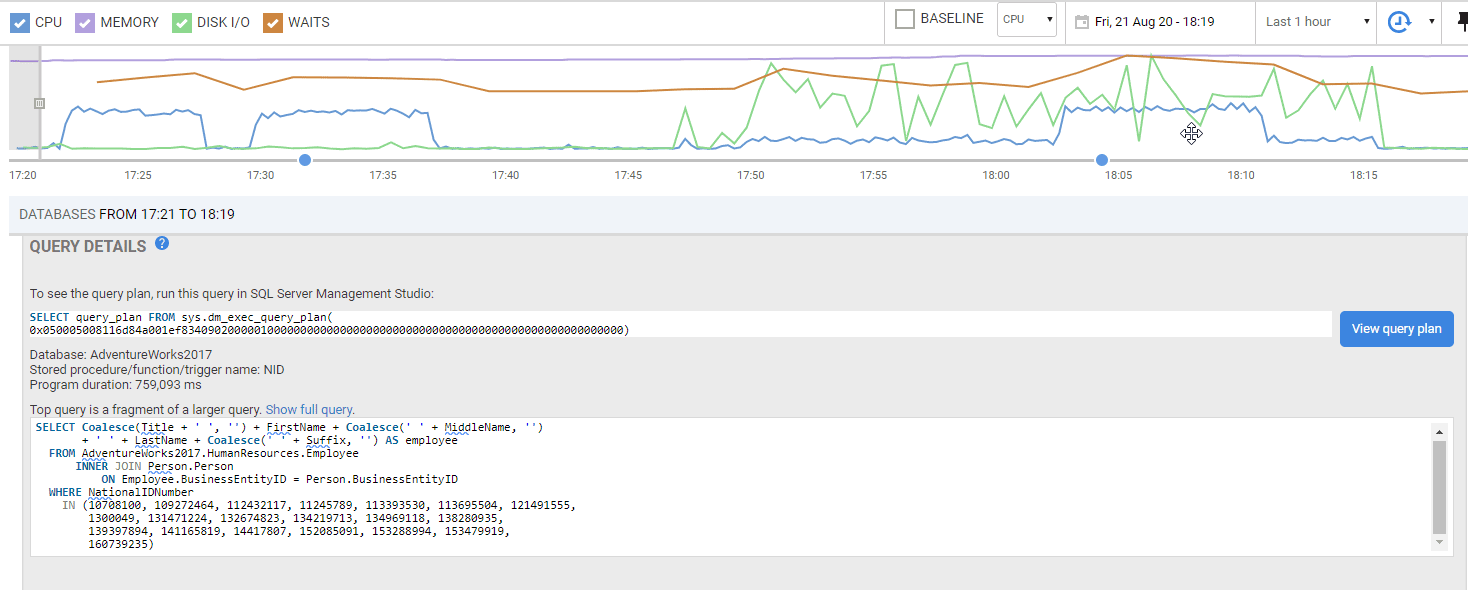

Level 3 – Specialist RDBMS diagnostics

Level 1 tells you that a business service is in trouble. At Level 2, you know what device in the network is giving trouble, and you have enough detail about the problem to be able to send a message to the appropriate expert, DBA or whoever you need to extract from their evening date or slumber. However, at level two, it is hard to provide all the metrics that are necessary for a confident diagnosis.

A level 3 monitoring system will provide detailed information and diagnostics for a particular type of service. It is not just for databases or websites, but every black box. There are few devices that can be healed without logging in via a laptop, even your washing machine or car. It isn’t just database that need ‘level 3’ to get to the root of a problem and provide a long-term solution. Even a ‘black box’ network component can be as troublesome as a toddler and require advanced, specialized diagnostics.

Every relational database system (RDBMS) is likely to require its own system of ‘extraction’ because there has never been an effective cross-RDBMS standard that is able to answer more than the simplest questions. Although one generally needs to investigate blocking processes, long-running queries and those having the most impact, many of the metrics are likely to be specific to the RDBMS, often most efficiently collected using bespoke features (such as Query Store or Extended Events, for SQL Server).

Minimizing the chances of failure

After something goes wrong with a service, there is usually an ‘autopsy’ by the IT team involved to work out what failed and why. In my experience, there are almost always ‘portents’ or signs that precede a failure. Odd things start happening that are often signs of stress. The classic one with a database is a rapidly increasing response time.

Devices, networks and servers change so frequently that it is impossible to give an exhaustive list, but the signs of a database running out of disk space is a hardy perennial, as is sector failure in a NAS. By taking time to learn the lessons from every incident, one can prevent many of the unpleasant consequential events.

In general, the activity of detecting the causes of stress in a complex system such as a database becomes part of the overall support. Unfortunately, in dysfunctional organizations, there is often far more glory to be had in putting out metaphorical fires than engaging in metaphorical fire-prevention. It is one of the sayings in operations and support that ‘everyone loves a fire-fighter’. And yet, clearly, monitoring any IT system for stresses and signs, and taking the correct action to prevent any escalation, is far more effective and efficient than fixing broken systems, so it is worth spelling out what is involved.

It is in prevention that level 1 becomes more complicated to set up and maintain. The pat answer is that someone should poll every system to check out the stress points. Not only does this become impractical as the estate of databases grows, but it is also too tedious and unrewarding for a human to do. However good your scripted database-checking system is, it must be able to collate and summarize information. You must be able to customize it to the point that you can get consolidated reports for your database applications. You can’t poll every database to inspect the logs, just in case. Your brain will eventually take preventative measures, log you off and your head will hit the keyboard as you fall into a deep sleep (a condition we used to call “working from home”).

The data gathered to answer the questions at Level 1 (are all services being provided?) and level 2 (where is the fault?) are insufficient for Level 3 because the causes of stress in a database aren’t always obvious, and we need specialist tools to check for signs of incipient failure. The same goes for your fridge or your car.

You might think that SQL Server, or any other brand of RDBMS, will behave in a similar way. All you have to do is to stare at those graphs, nodding sagely. No, you must know what to look for. Your system will be unique in some of the ways it is used or even the other systems on which it relies. The data feeds, maybe, and downstream systems. Your monitoring system will therefore need additional custom metrics for all those aspects of your system that are uncommon, but that need to be tracked, because certain patterns will indicate an incipient failure that can be prevented.

Your level 3 monitoring needs to combine those checks that are appropriate to all databases, such as signs of intrusion or shortage of disk space, with the ability to drill down to the detail. It also needs the metrics that the business understands, such as ‘invoices issued’, to detect problems with business processes such as a failed payment system. The organization that owns the database will only understand how well the database is working using terms that the business understands.

Any level 3 database monitor must be able to see the big picture, so it must be sufficiently customizable to do its own aggregation, trends and projections.

Managing large database estates

As the number of databases and platforms that must be supported and monitored expands, it becomes increasingly helpful to be able to tag services, databases, or groups of databases.

You might, for example want to distinguish Azure SQL Databases, Azure Managed Instances and Amazon RDS instances, so that it becomes much easier to see that a cloud provider has a major outage in a particular location. Equally, grouping and tagging will make it more obvious if you have a particular issue that only affects a particular hosting method or service, or only happens in a particular virtual environment.

From level 2 onwards, these tags need to be added to alerts and SNMP notifications, so that you can then put extra information in the alert details.

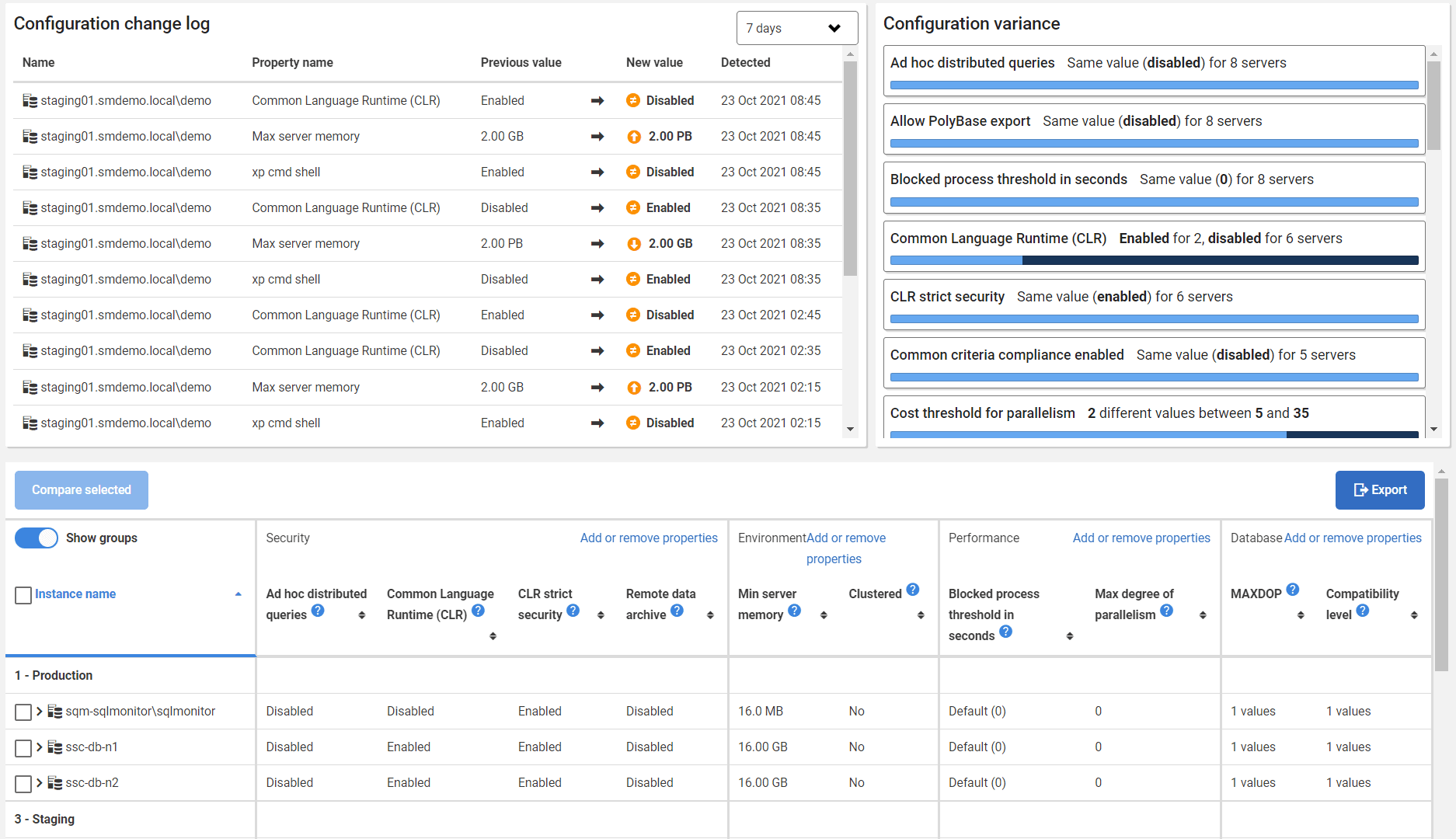

Compliance checks

It is easy to make a mistake in the configuration of a relational database such as a SQL Server instance, but it can be hard to monitor your estate of servers to spot these mistakes, or any unauthorized changes to the settings. Oracle, PostgreSQL, and SQL Server all have lot of options and some of these settings can have security implications. For example, Oracle now recommends mutual TLS (mTLS) logins with a wallet, and 2FA on top of that. SQL Server has several different security configuration settings and publish ‘best practices’ for various types of installation.

With hundreds of SQL Server installations in the estate, you would ideally need a method of checking installations of any type of database, but there is so little standardization that each RDBMS will require its own system for checking anomalies in the configuration, to be able to illustrate the differences in configuration of monitored database instances across the estate, and to detect changes.

To do this, configurations of each server need to be archived in a version control or change management system that is secure from tampering. This must be done for all the RDBMSs that are being used in production within the organization.

Security

Intrusion detection affects the entire network and so any system must be generic and live in levels 1 and 2. Sometimes the signs of intrusion will involve brute-force attacks, privilege escalation or some other anomalous activity that suggests that an intruder has gained access to the network. This must inspire a general alert because it is likely to affect all services, like a fire or a burst water main.

A SQL Injection attack is different, because it is done without needing access to the network, so the database application that is failing to parametrize its database queries properly needs to be taken offline until it is secured. Unless the SQL Injection attack was wildly successful, it will not necessarily represent an access into the network.

Specialized level 3 monitoring plays a part by checking security issues in the configuration of databases. Some of the Level 3 tools will probably include one of the standard set of penetration tests that check for vulnerabilities in database installations.

Summary

It might seem a paradox that monitoring should be as simple as possible, and yet must allow the user to drill into sufficient detail to be able to recommend a fix to the problem. The trick is to adopt a layered or ‘tiered’ approach. In the first tier, your system adopts the user perspective. Is there an end-to-end service? Either the service as agreed upon is there, or else it isn’t. If there isn’t as service, then on to level 2. This level must answer the simple question, ‘What components of the service are broken’? ‘What needs an in-depth investigation?’ Level 3 must provide the specialized tool that allows sufficient drill-down into the detail to provide the right information to get the service back into operation, whatever it is. In support terms, databases are no different from other complex network services in that they need specialized software tools.

Tools in this post

Redgate Monitor

Real-time multi-platform performance monitoring, with alerts and diagnostics